Author: Martin Purgat

Supervisor: RNDr. Zuzana Berger Haladová, PhD.

Consultant: Mgr. Lukáš Gajdošech

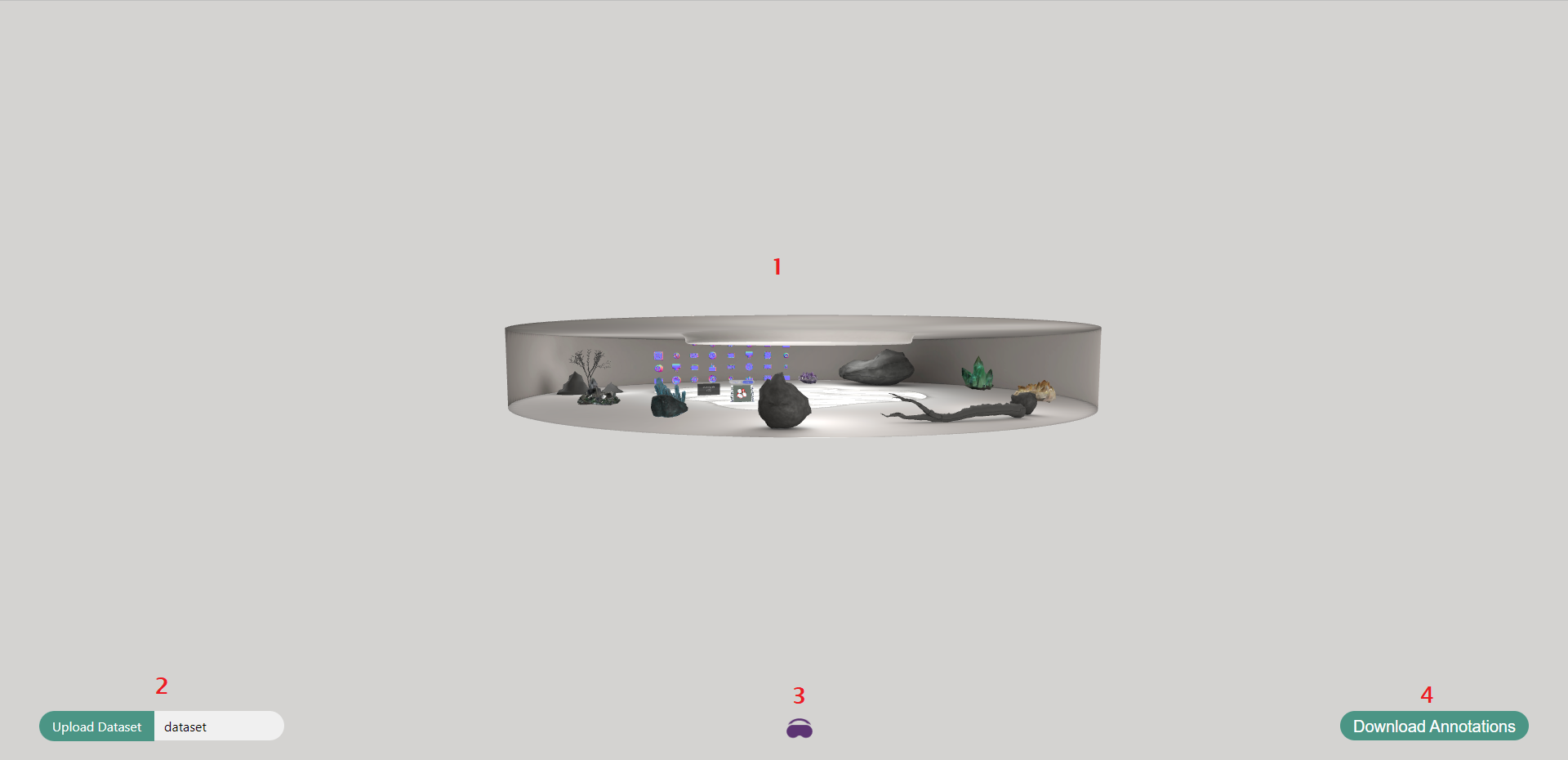

6D pose estimation is a computer vision task that aims to determine the position and orientation of an object in 3D space. It is a crucial step in many robotic applications, such as bin picking, pick and place, or autonomous driving. The goal of this project is to develop a tool for semi-automatic annotation of 6D pose estimation datasets. The tool will be implemented as a web application with a virtual reality interface. The user will be able to load an RGB-D point cloud of a scene and CAD models of the objects, and annotate the scene by placing the objects directly into the point cloud. The user will be able to correct a pose by moving the object with a controller (the "virtual hand" metaphor) directly in VR. The tool will also allow the user to refine the pose using a human-in-the-loop version of the Iterative Closest Point (ICP) algorithm. Finally, the user will be able to export the annotated point cloud, and we will conduct a user study to evaluate the usability of the tool.
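As a rough illustration of what the tool manipulates, a 6D pose can be stored as a 3×3 rotation matrix R (three rotational degrees of freedom) plus a translation vector t (three translational ones), and point-to-point ICP scores a pose by how closely the transformed CAD model points land on the scene point cloud. The TypeScript below is a minimal sketch under stated assumptions: the `Pose6D` type, the helper names, and the brute-force nearest-neighbour search are hypothetical and are not taken from the thesis implementation. It only evaluates the alignment error; a full ICP loop would alternate this correspondence search with a closed-form pose update (for example an SVD-based fit), which is omitted here.

```typescript
// Hypothetical types for illustration: a 6D pose as rotation matrix + translation.
type Vec3 = [number, number, number];
type Mat3 = [Vec3, Vec3, Vec3];

interface Pose6D {
  rotation: Mat3; // row-major 3x3 rotation matrix R
  translation: Vec3; // translation vector t
}

// Rotation about the Z axis by `angle` radians (helper for the example only).
function rotationZ(angle: number): Mat3 {
  const c = Math.cos(angle);
  const s = Math.sin(angle);
  return [
    [c, -s, 0],
    [s, c, 0],
    [0, 0, 1],
  ];
}

// Apply the pose to a point: p' = R p + t.
function transformPoint(pose: Pose6D, p: Vec3): Vec3 {
  const R = pose.rotation;
  const t = pose.translation;
  return [
    R[0][0] * p[0] + R[0][1] * p[1] + R[0][2] * p[2] + t[0],
    R[1][0] * p[0] + R[1][1] * p[1] + R[1][2] * p[2] + t[1],
    R[2][0] * p[0] + R[2][1] * p[1] + R[2][2] * p[2] + t[2],
  ];
}

function squaredDistance(a: Vec3, b: Vec3): number {
  const dx = a[0] - b[0];
  const dy = a[1] - b[1];
  const dz = a[2] - b[2];
  return dx * dx + dy * dy + dz * dz;
}

// Point-to-point error in the style of ICP: transform each model point by the
// pose, find its nearest scene point (brute force, O(n*m)), and return the
// root mean square of those nearest-neighbour distances.
function alignmentRmse(pose: Pose6D, model: Vec3[], scene: Vec3[]): number {
  let sum = 0;
  for (const m of model) {
    const q = transformPoint(pose, m);
    let best = Infinity;
    for (const s of scene) {
      best = Math.min(best, squaredDistance(q, s));
    }
    sum += best;
  }
  return Math.sqrt(sum / model.length);
}

// Usage: build a toy "scene" by moving a tiny model with a known pose,
// then compare the error of that pose with the identity pose.
const model: Vec3[] = [
  [1, 0, 0],
  [0, 1, 0],
  [0, 0, 1],
];
const truePose: Pose6D = { rotation: rotationZ(Math.PI / 2), translation: [1, 2, 3] };
const identityPose: Pose6D = {
  rotation: [
    [1, 0, 0],
    [0, 1, 0],
    [0, 0, 1],
  ],
  translation: [0, 0, 0],
};
const scene: Vec3[] = model.map((p) => transformPoint(truePose, p));

console.log("error at true pose:", alignmentRmse(truePose, model, scene));
console.log("error at identity pose:", alignmentRmse(identityPose, model, scene));
```

The error at the true pose is (near) zero, while the identity pose scores noticeably worse; in a human-in-the-loop setting, the user's manual placement with the controller would serve as the initial pose from which such an error is then minimised.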

I was thinking about doing a VR-related project. I met with my supervisor, and we discussed several project ideas. Eventually, we decided on a project related to 6D pose annotation.

I started working on the project. I did some research on existing 6D pose estimation datasets and annotation tools. I also started learning about point cloud processing algorithms and the frameworks used in this field.

We settled on the final project goals. I started experimenting with the libraries and frameworks I was planning to use in the project. I also started learning about VR development.

I took a break from the project to work on some other projects. Also, hey, it's Christmas time!🎄🎁

I created a web page for the project and started thinking about the structure, visuals, and general design of the application.

I started implementing the application. I decided to use Vue.js and Three.js. I created my first dynamic VR scene!

I implemented the basic features and functionality of the application.

I started working on more advanced features, such as experimenting with human-in-the-loop ICP.

We conducted a user study to evaluate the usability of the application.

I also started writing the final text.

Thesis submitted! Thoughts and prayers please!🙏

You can read the final text of the thesis here.

All references can be found in the final text of the thesis.