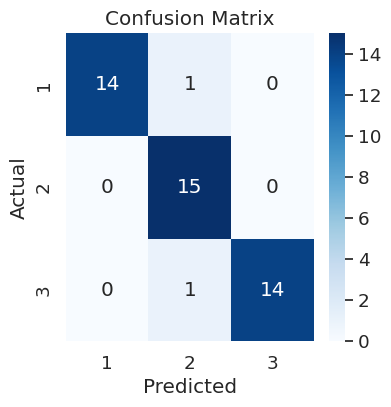

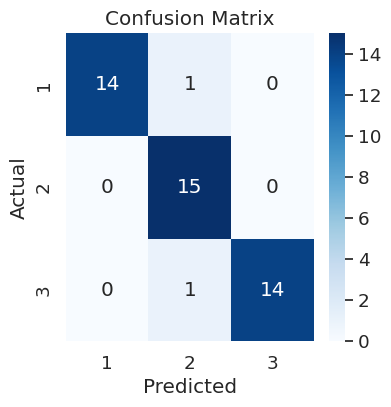

Image results

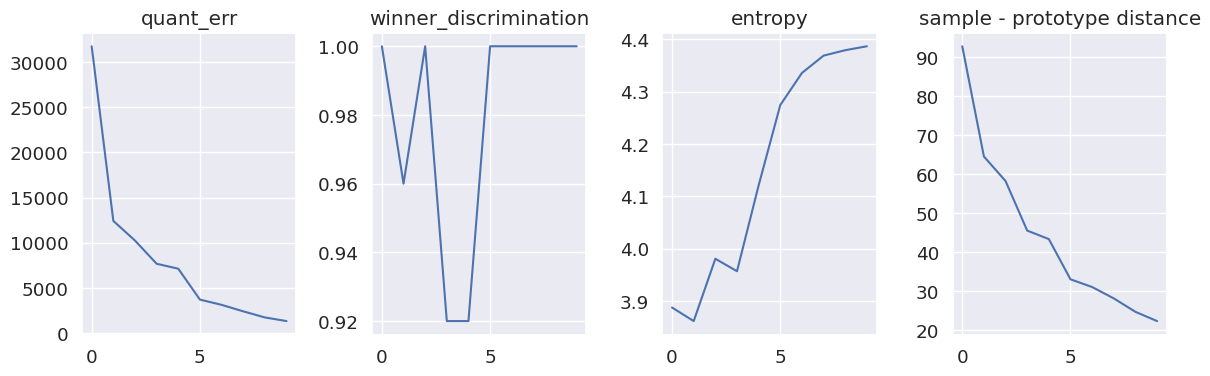

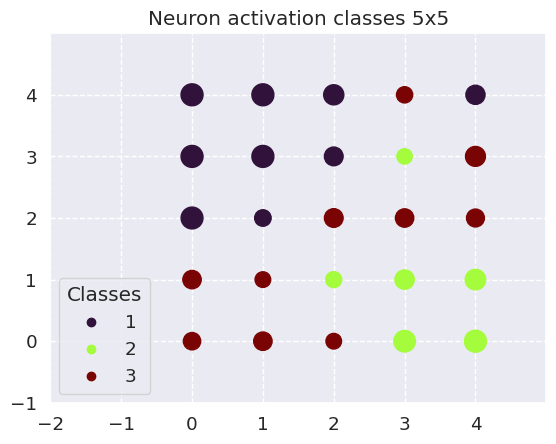

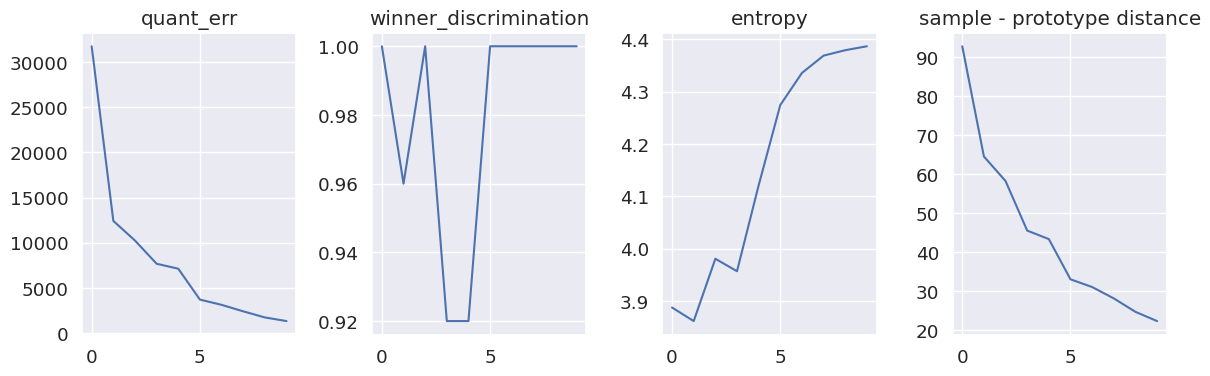

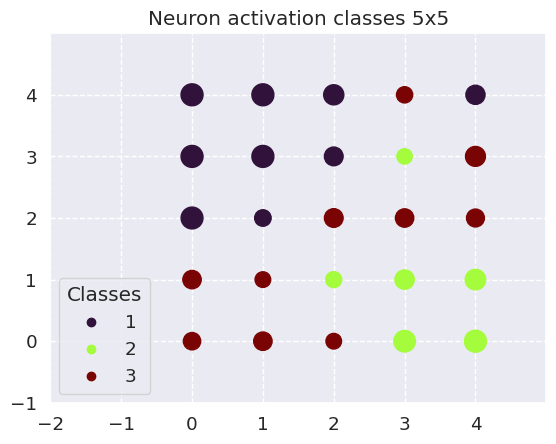

SOM training

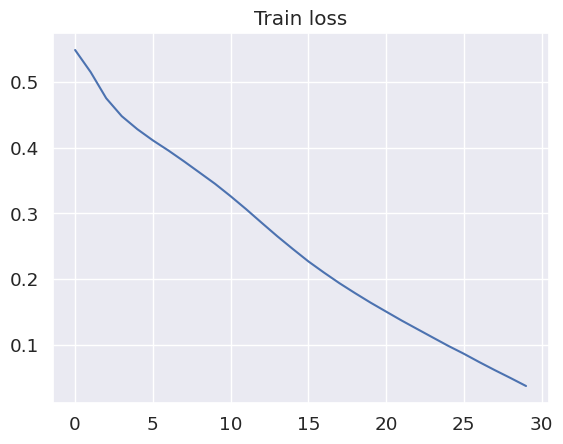

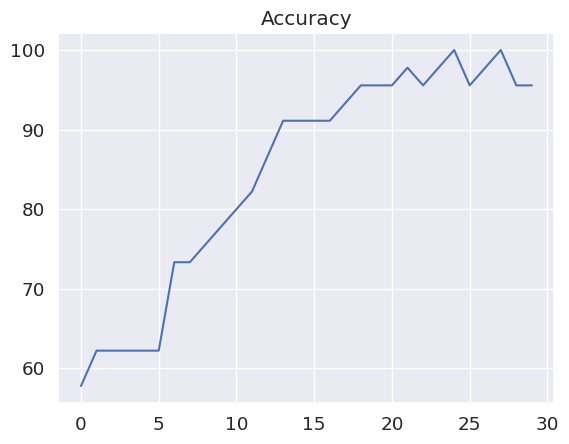

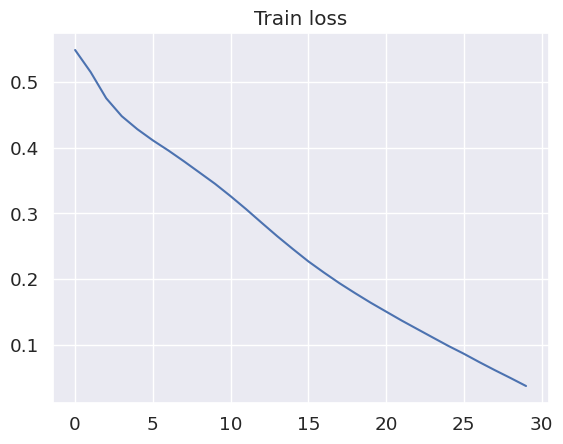

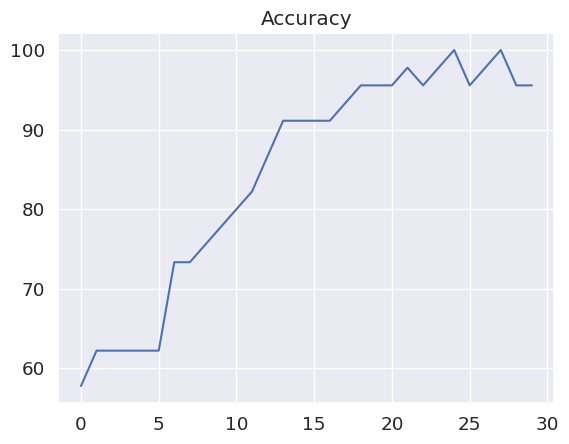

MLP+SOM training

Goal: Study the existing models for semi-supervised categorization, with a focus on the Temporal Ensembling and Mean Teacher models. Provide an overview of the current state of the art, propose an extension of the semi-supervised model using self-organizing maps, and evaluate the new model.

Annotation: Deep neural networks are currently the most widely used and researched models in machine learning, with applications in many different domains. However, training such models requires an abundance of adequately labeled data, and labels for real-world data are scarce. The semi-supervised learning paradigm aims to alleviate this problem through various techniques, which typically capture and evaluate the distance between the feature vectors of labeled and unlabeled data, so that learning is based on similarity. This approach is used, for example, in the popular Mean Teacher (MT) model. Self-organizing maps (SOMs) are classical neural network models that do not require a training signal yet capture relationships among the data presented to the network, preserving similarity in a topological fashion. This mechanism could be used to improve semi-supervised learning with information derived from the structure of the data or its feature vectors.
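To illustrate how a self-organizing map captures similarity without a training signal, here is a minimal NumPy sketch of a single SOM update step. It is not the model proposed in this project. The grid size, learning rate `lr`, neighbourhood width `sigma`, and the function names `best_matching_unit` and `som_step` are all assumptions chosen for illustration.

```python
import numpy as np


def best_matching_unit(weights, x):
    """Return the (row, col) grid index of the unit whose weights are closest to x."""
    dists = np.linalg.norm(weights - x, axis=-1)  # shape (rows, cols)
    return np.unravel_index(np.argmin(dists), dists.shape)


def som_step(weights, x, lr=0.5, sigma=1.0):
    """One unsupervised update: pull the BMU and its grid neighbours toward x.

    lr and sigma are illustrative values, not tuned settings.
    """
    rows, cols, _ = weights.shape
    bmu = best_matching_unit(weights, x)
    rr, cc = np.meshgrid(np.arange(rows), np.arange(cols), indexing="ij")
    # Squared distance on the 2-D grid (not in feature space) to the BMU.
    grid_dist2 = (rr - bmu[0]) ** 2 + (cc - bmu[1]) ** 2
    # Gaussian neighbourhood: 1 at the BMU, decaying with grid distance.
    h = np.exp(-grid_dist2 / (2.0 * sigma ** 2))
    return weights + lr * h[..., None] * (x - weights)


rng = np.random.default_rng(0)
weights = rng.random((5, 5, 3))   # hypothetical 5x5 map over 3-D feature vectors
x = np.array([0.2, 0.7, 0.1])     # one unlabeled input, no label needed

bmu = best_matching_unit(weights, x)
before = np.linalg.norm(weights - x, axis=-1)
updated = som_step(weights, x)
after = np.linalg.norm(updated - x, axis=-1)
```

Because neighbouring units on the grid are updated together, nearby units end up with similar weight vectors, which is the topology-preserving property the annotation refers to. No label is used at any point in the update.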

| Semester | Period | Task |
|---|---|---|
| winter semester 2022 | | Study literature about MT, BMT experiment |
| summer semester 2022 | 1st half | Study literature about other models, definition of our model, brainstorming on SOM loss, straightforward implementation of MT + SOM (experiments ran too long, fine-tuning not possible) |
| summer semester 2022 | 2nd half | Architecture change (to a smaller one - Sarmads), still too slow, visualizations and SOM metrics, writing KUZ article |
| winter semester 2023 | week 1 - 3 | Designing SOM loss, designing an experiment to show that SOM loss improves performance, implementation of the experiment, running the experiment |
| winter semester 2023 | week 4 - 6 | Redefining SOM loss and the experiment several times, new implementation and bug fixing |
| winter semester 2023 | week 7 - 8 | Running experiments, recording results |
| winter semester 2023 | week 9 - 11 | Writing the chapter about the experiment and the overview chapter |